Tracking obstacles for a humanoid robot

For a university project in 2016 I worked on computer vision for a humanoid robot named LOLA, which had already been in development for several years at the Chair of Applied Mechanics at TU Munich.

For environmental perception and navigation around and over obstacles, an Asus Xtion PRO LIVE Kinect-like RGB-D camera had been installed on LOLA’s “head”. This type of camera outputs a 3D image of surfaces in the field of view, also referred to as a point cloud. My task was to detect and track obstacles in real time from the point cloud and fit primitive SSV1 collision volumes around them, as well as to estimate a velocity vector from moving obstacles.

Just to be clear, we didn’t need to do actual object recognition or classification. We more or less just cared about fitting collision models over arbitrary clusters of points, and track them over time. Of course such clusters of points may “belong” to several different actual objects, if the objects are close to each other. Also, the floor of the point cloud is already getting removed by a previous detection step.

The research group had previously assumed a static enviroment for robot navigation. Now we also wanted to track moving obstacles, such as a ball rolling across the floor, predict the (linear) motion and have LOLA react in time accordingly. For this requirement, we had to consider that a) already separate moving obstacles needed to be kept separate, even when briefly touching each other and b) moving objects previously attached some other static obstacle in the point cloud needed to be split into separate, individually tracked obstacles. Further, noise and sporadic outliers from the sensor or the floor-removal step should not affect the obstacle approximations too heavily.

Long story short, after a bunch of research and experimentation I ended up developing an efficient method that used some existing methods in combination with my own ideas. One half of this method uses a probabilistic approach that operates by continually fitting a variable Gaussian Mixture Model (GMM) over time using the Expectation Maximization (EM) algorithm, but with added regularization/filtering. The other half has a non-probabilistic portion entailing a coarse 3D voxelization and voxel-clustering step that was needed to detect structures in the point cloud splitting up, as well as to detect new obstacles entering the frame (among other things).

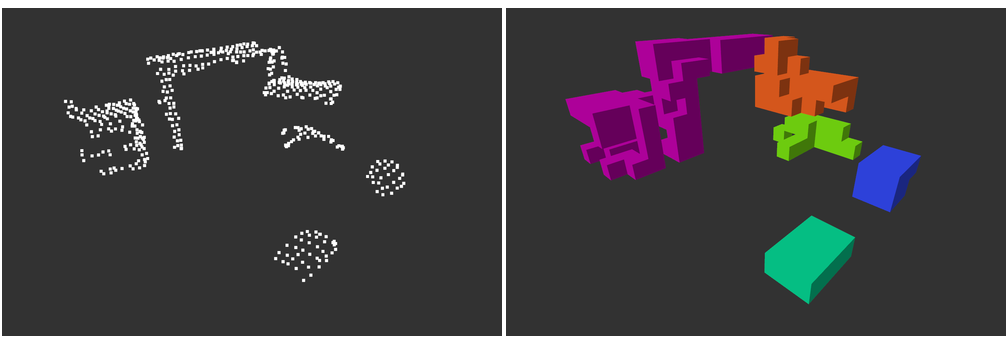

One frame from the downsampled point cloud on the left and the voxelization to the right. Connected voxels are clustered together, indicated by the different colors.

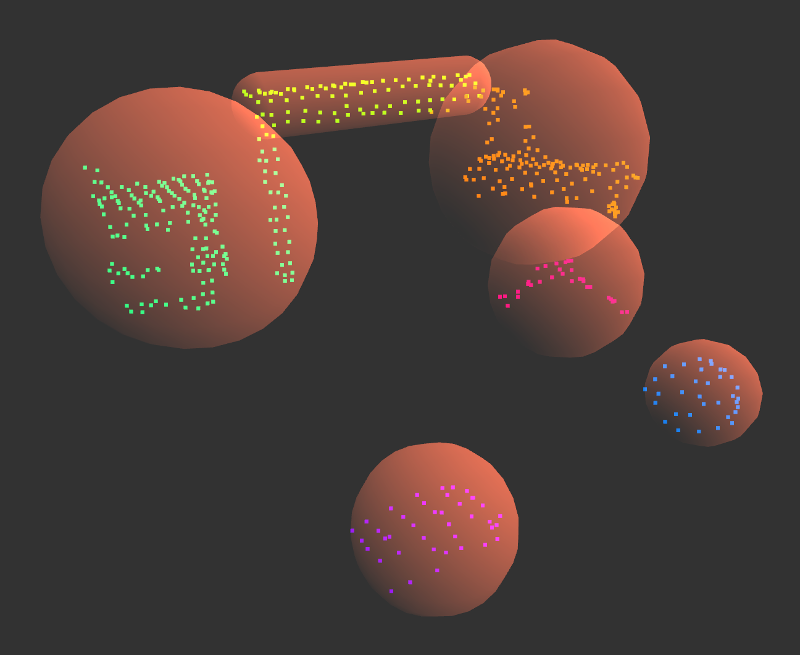

Using the data of the GMM, the SSVs could be aligned to the eigenvectors with largest eigenvalues of the covariance matrices and sized to contain all hard-assigned points.

SSV approximation of a scene with 5 spheres and one capsule.

For velocity estimation and positional filtering we used a standard linear Kalman filter on the obstacle centers, where both position and velocity is assumed to be subject to Gaussian noise, but only the location of the object can be observed, and the observation is also assumed to be subject to Gaussian noise.

I implemented this project in C++ using the Eigen library. With some optimization efforts I got the processing time down to 2ms per frame for 5 tracked obstacles. This was a 10-20x improvement from the previous algorithm that I replaced. To be fair, my approach involved downsampling the point cloud to around 1000-2000 points. Although without downsampling (6000-20000 points), I could achieve 10-25 ms per frame, which is still a 3x improvement over the previous algorithm, which became a bit of a slideshow when too many objects appeared in the scene. For reference, the sensor delivers point clouds at a rate of 30 Hz, so we definitely hit realtime capabilities without skipping frames.

My work made it into this paper. Section 3.4 is my contribution, although I’m not credited as a co-author. At least you can find my name in the acknowlegements.

Videos

Check out some clips here. More info on the OpenGL visualization tool I wrote from scratch together with a fellow student is available here.

Tracking a ball bouncing off obstacles with filtered velocity estimation. Even though the ball connects with static obstacles, tracking is robust enough to keep them separate.

The gaussian mixture model visualized using ellipsoids.

Tracking a ball that is partially shadowed as it moves between the legs of a chair.

A more complex test scenario.

For comparison, the previous implementation.

- SSV stands for swept-sphere-volume, which are either spheres or capsules. Using SSVs simplifies collision-related computations, since all points on the surface of a SSV have constant distance to its central point or line. [return]